Hey,

close to a year ago I came across concourse.ci while evaluating tools to run builds whenever changes landed in a git repository. Even though the team I worked with ended up creating a custom-tailored system (we needed much less than what Concourse, or something like Jenkins, provides), I think Concourse gets a lot right.

This post is not meant to teach you the concepts behind Concourse or provide use cases, but to show how you can run a development version of it locally if you’re willing to contribute to the project.

Setting up the development environment

Concourse (and the Cloud Foundry project as a whole, it seems) vendors its dependencies using git submodules.

There’s always a repository somewhere that aggregates dependencies and gives a consistent view of how things group together. In the case of Concourse, it’s github.com/concourse/concourse.

For the purposes of this post there’s no need to run all the parts of Concourse (we’re only exploring atc and PostgreSQL), but before breaking things apart, I think it’s good to get a complete example running.

Pick that repo and put it somewhere in your FS (doesn’t need to be under your current $GOPATH) and then initialize the submodules (if you have any doubts, check the CONTRIBUTING.md file at this repository):

# clone the repository that aggregates the whole

# project and its dependencies

git clone https://github.com/concourse/concourse

cd concourse

# initialize the submodules

git submodule update --init --recursive

# if not using direnv (https://github.com/direnv/direnv),

# execute the commands described under `.envrc` file in

# the current shell session (that `.envrc` is essentially

# setting the GOPATH to the `./src` directory under

# concourse/concourse).

source .envrc
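Before moving on, it can help to confirm that every submodule was actually checked out. The sketch below relies on the fact that `git submodule status` prefixes uninitialized submodules with a `-`; the helper name `count_uninitialized_submodules` is made up for this post and is not part of the Concourse tooling.

```shell
# Hypothetical helper: reads `git submodule status` output on stdin and
# prints how many submodules are still uninitialized (lines starting with `-`).
count_uninitialized_submodules() {
  # `grep -c` prints the number of matching lines; `|| true` hides the
  # non-zero exit status grep returns when there are no matches.
  grep -c '^-' || true
}

# Usage, from the root of the concourse/concourse checkout:
#   git submodule status --recursive | count_uninitialized_submodules
```

If it prints anything other than `0`, running the `git submodule update --init --recursive` step again is probably the first thing to try.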

With that set, I update the ./dev/Procfile file, which describes the processes to start, so that it runs a dockerized version of the PostgreSQL database and makes atc point to that DB instead of a local instance:

--- a/dev/Procfile

+++ b/dev/Procfile

@@ -1,4 +1,4 @@

-atc: ./atc

+atc: ./atc-dockerdb

tsa: ./tsa

worker: ./worker

-db: ./db

+db: ./dockerdb
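If you'd rather not edit the file by hand, the same change can be scripted. This is only a sketch: the function name `use_docker_db` is made up, and it assumes the two lines read exactly `atc: ./atc` and `db: ./db` as in the diff above.

```shell
# Hypothetical helper: rewrites a Procfile so that `atc` and `db` use the
# docker-backed scripts, mirroring the diff above.
use_docker_db() {
  procfile="$1"
  tmp="$(mktemp)"
  # Write the edited copy to a temp file first, then copy it back over the
  # original so its permissions are left untouched.
  sed \
    -e 's|^atc: \./atc$|atc: ./atc-dockerdb|' \
    -e 's|^db: \./db$|db: ./dockerdb|' \
    "$procfile" > "$tmp" &&
    cat "$tmp" > "$procfile"
  rm -f "$tmp"
}

# Usage, from the root of the checkout:
#   use_docker_db ./dev/Procfile
```

Lines that don't match (like `tsa` and `worker`) pass through unchanged.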

With that set, start:

./dev/start

Using default tag: latest

latest: Pulling from concourse/con...

Digest: sha256:ac10eadf32798da0567...

Status: Image is up to date for co...

10:21:25 atc | Starting atc on ...

10:21:25 tsa | Starting tsa on ...

10:21:25 db | Starting db on p...

10:21:25 worker | Starting worker ...

10:21:26 db | 2939be72c6e24ddb...

10:21:26 tsa | {"timestamp":"15...

10:21:26 db | Terminating db

10:21:26 worker | {"timestamp":"15...

10:21:28 atc | creating databas...

10:21:31 atc | creating databas...
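It takes a few seconds for everything to come up, so a small retry loop can be handy before running further commands. The `retry` function below is a generic sketch written for this post (it is not part of Concourse); the usage comment assumes atc answers on http://127.0.0.1:8080, the address used for login later on.

```shell
# Hypothetical helper: runs a command up to `attempts` times (at least once),
# sleeping `delay` seconds between tries, and succeeds as soon as it does.
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while :; do
    # Stop as soon as the command succeeds.
    "$@" && return 0
    # Give up once the attempt budget is used.
    [ "$i" -ge "$attempts" ] && return 1
    i=$((i + 1))
    sleep "$delay"
  done
}

# Usage: wait up to ~30s for something to answer HTTP on the atc address
#   retry 30 1 curl --silent --output /dev/null http://127.0.0.1:8080
```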

Using FLY locally

To interact with the Concourse API (provided by atc) we use the fly command line interface (CLI). It’s the piece that makes use of go-concourse to communicate with ATC in a standard fashion.

Installing it is simple after all submodules have been initialized. Head to concourse/concourse/src/github.com/concourse/fly and build it:

# Make sure you have your `GOPATH` properly set by sourcing

# the `.envrc` file

source .envrc

# get to the directory where the source of the FLY command

# line is.

# This should be there after you've installed the submodules.

cd ./src/github.com/concourse/fly

# By using `go install` we'll have the final result of

# the build in `$GOPATH/bin` which should be in your

# $PATH after sourcing `.envrc`.

go install -v

# check if everything is working

fly --version

0.0.0-dev
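If `fly` is not found after the install, the usual culprit is `$GOPATH/bin` missing from `$PATH`. Here is a small check, as a sketch; `dir_on_path` is a name invented for this example.

```shell
# Hypothetical helper: succeeds if directory $1 is an entry of the
# colon-separated list $2 (defaults to the current $PATH).
dir_on_path() {
  # Wrapping the list in colons lets us match whole entries only.
  case ":${2-$PATH}:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage, after sourcing `.envrc`:
#   dir_on_path "$GOPATH/bin" || echo "add $GOPATH/bin to your PATH"
```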

With the CLI installed, we can now create a target that we’ll run commands against:

# Authenticates against a concourse-url (`127.0.0.1:8080`) and

# saves the auth details under an alias (`local`) in `~/.flyrc`.

#

# As we're not specifying a `team` (`--team-name`) it'll use the default

# one (`main`). A team can be thought of as a namespace under which

# pipelines and builds fall.

#

# When testing concourse locally (and not dealing with auth) we can stay

# with `main` (which is also an administrator team) and not worry about

# auth at all (`atc` is being initialized with the `--no-really-i-dont-want-any-auth`

# flag).

fly \

login \

--concourse-url http://127.0.0.1:8080 \

--target local

# Create a pipeline

echo '
jobs:
- name: hello
  plan:
  - task: say-hello
    config:
      platform: linux
      image_resource:
        type: docker-image
        source: {repository: alpine}
      run:
        path: echo
        args: ["hello"]
' > /tmp/hello.yml

# Submit the pipeline

fly \

set-pipeline \

--pipeline hello-world \

--config /tmp/hello.yml \

--target local

# List the pipelines to make sure it's there

fly \

pipelines \

--target local

name paused public

hello-world yes no
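Because YAML is indentation-sensitive, a quoted here-document can be a less fragile way to write the pipeline file than `echo`. The sketch below also keeps the file path in a single variable (`PIPELINE_FILE`, a name chosen for this example) so the same path is used both when writing the file and when submitting it.

```shell
# Path is an assumption for this example; any writable location works.
PIPELINE_FILE="${TMPDIR:-/tmp}/hello.yml"

# The quoted 'EOF' delimiter stops the shell from expanding anything
# inside the document, so the YAML is written verbatim.
cat > "$PIPELINE_FILE" <<'EOF'
jobs:
- name: hello
  plan:
  - task: say-hello
    config:
      platform: linux
      image_resource:
        type: docker-image
        source: {repository: alpine}
      run:
        path: echo
        args: ["hello"]
EOF

# Then submit it with the same variable:
#   fly set-pipeline --pipeline hello-world --config "$PIPELINE_FILE" --target local
```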

Ok, everything is set up!

Running the pipeline from concourse web application

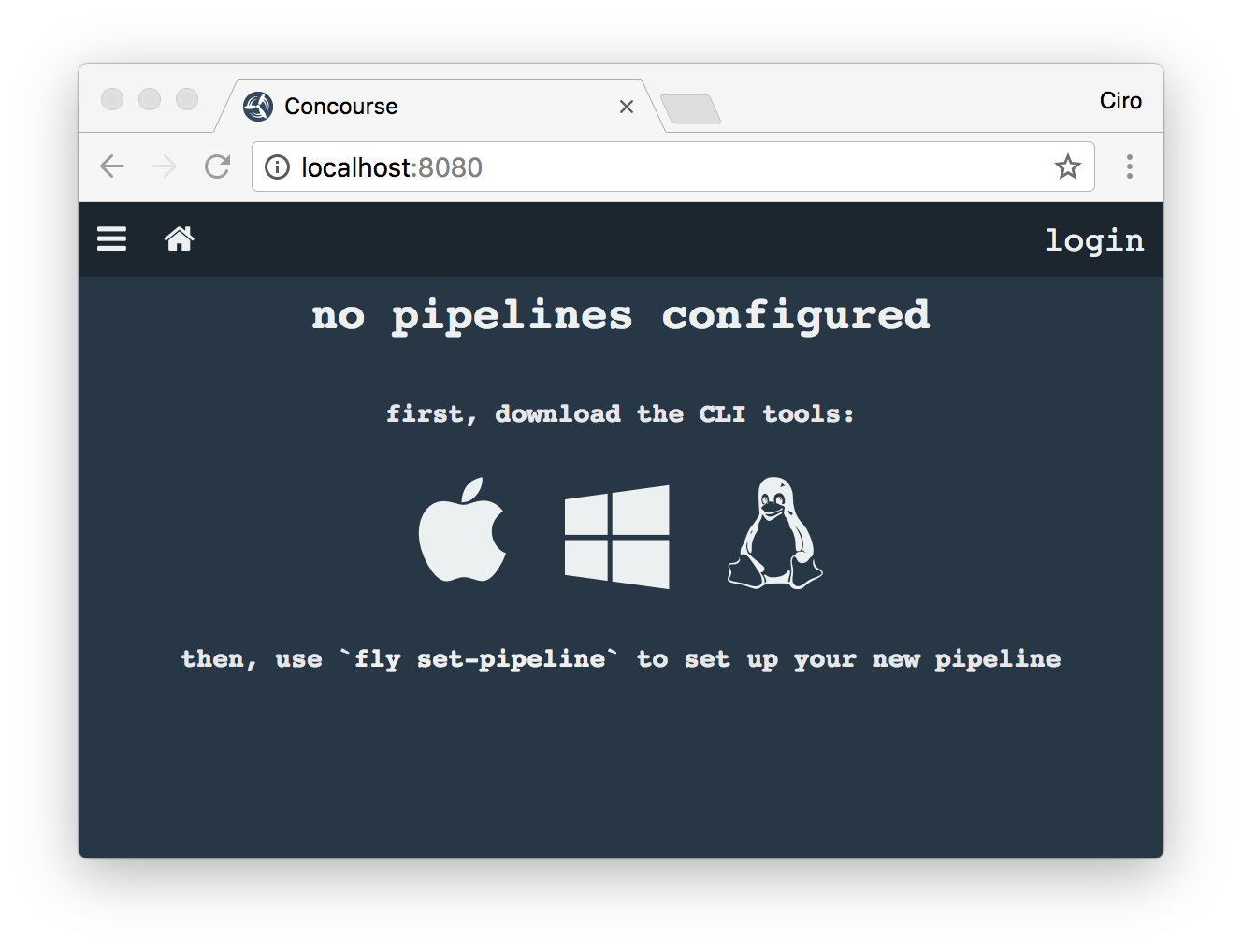

The first time you open localhost:8080 in your browser, no pipeline will show:

That’s because our sample pipeline is not public and we’re not authenticated.

Head to the login page (http://localhost:8080/login), click on main and log in. Because atc was started with --no-really-i-dont-want-any-auth, no questions will be asked.

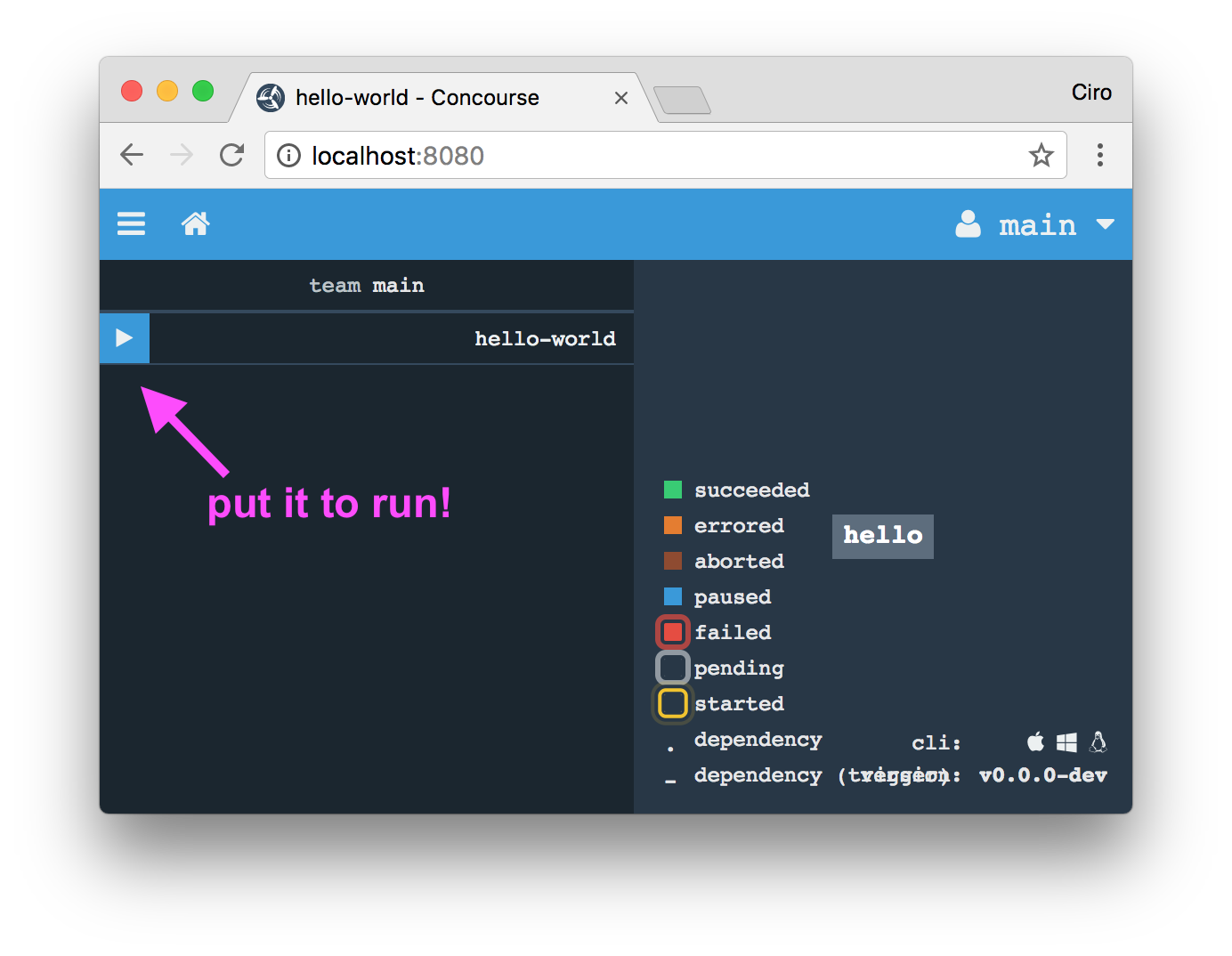

The next step is enabling the pipeline (unpausing it).

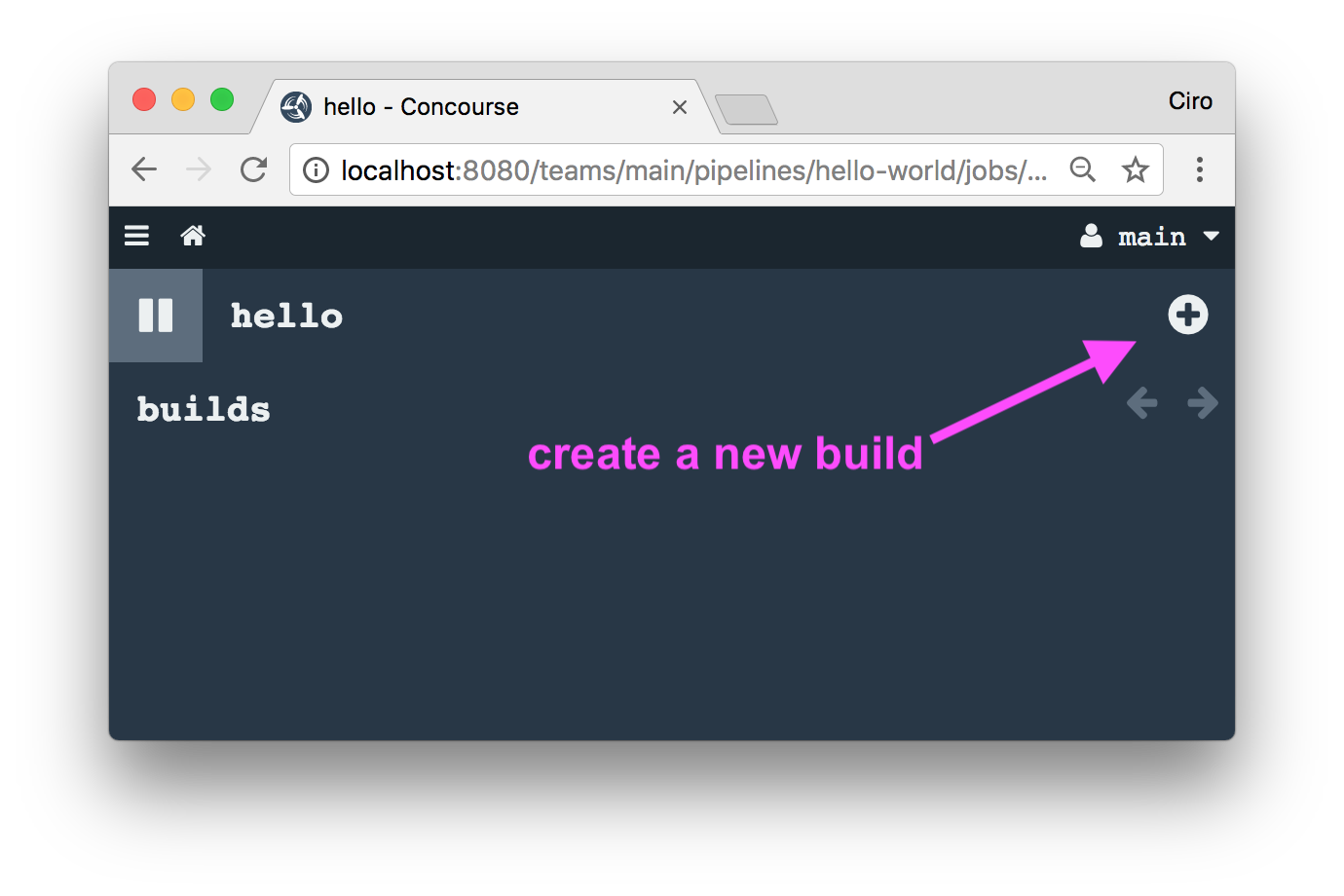

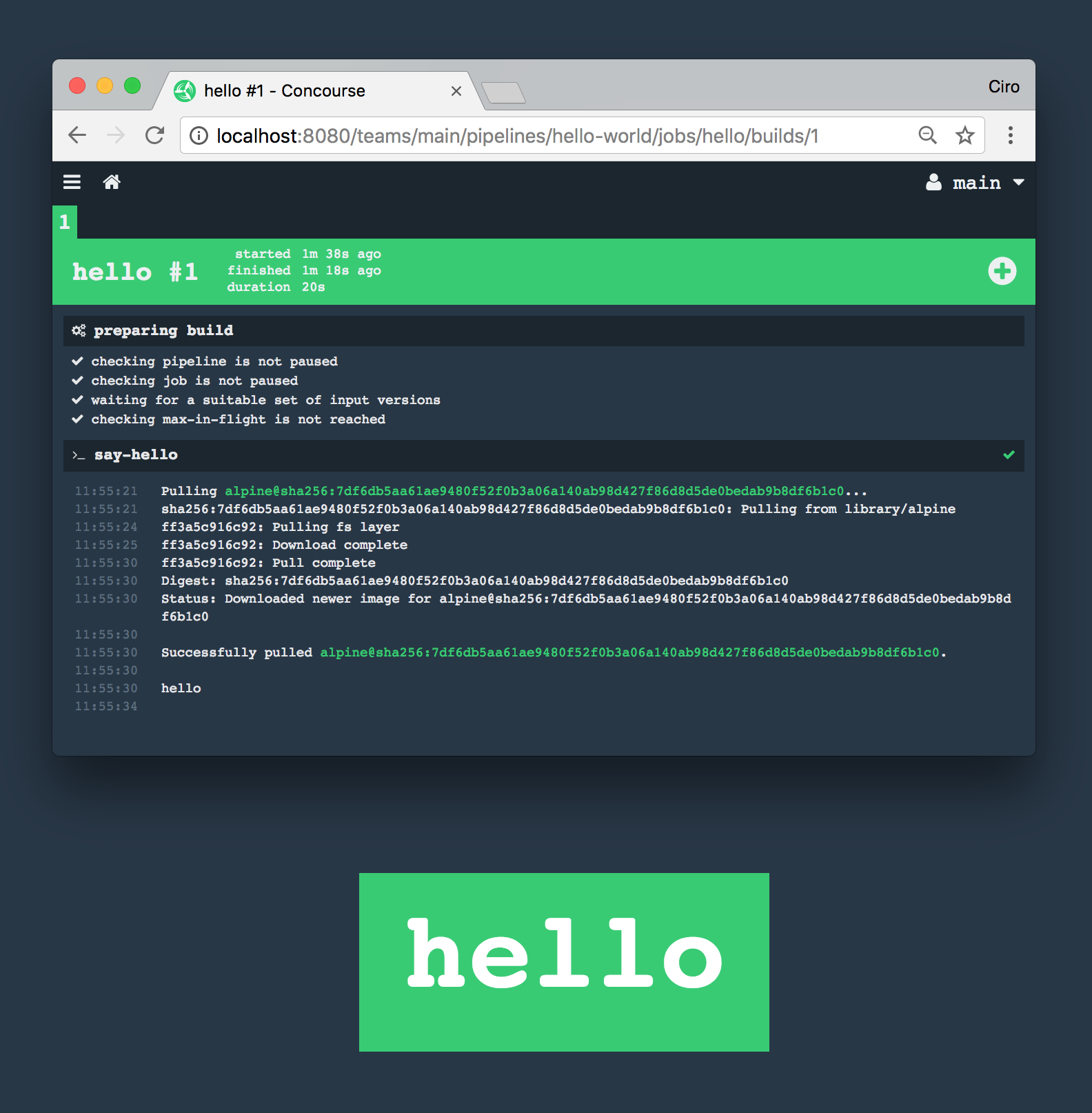

Once that’s done, select the pipeline and start a new build:

It should now be started:

I’m still not very familiar with what’s going on at this point, but my guess is:

- atc received a build request
- given that there’s a worker that registered itself against tsa, atc schedules the build to that worker (as it matches the constraints: platform == linux)
- a container is started at that worker somehow, which then reports the progress of the build back to atc
- that’s all persisted and also relayed to the ui somehow.

P.S.: I might be totally wrong here - check out the next posts to find out if that’s the case!

Some seconds later, we see that it succeeded:
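The same unpause-and-trigger flow can also be driven from the terminal instead of the web UI. This is a sketch that assumes your `fly` build ships the `unpause-pipeline` and `trigger-job` subcommands (check `fly --help`); the wrapper name `unpause_and_trigger` is made up for this post.

```shell
# Hypothetical wrapper: unpauses a pipeline and triggers one of its jobs,
# streaming the build output until it finishes (`--watch`).
# Arguments: target alias, pipeline name, job name.
unpause_and_trigger() {
  fly unpause-pipeline --pipeline "$2" --target "$1" &&
    fly trigger-job --job "$2/$3" --watch --target "$1"
}

# Usage with the names from this post:
#   unpause_and_trigger local hello-world hello
```

The job is addressed as `pipeline/job`, so for the pipeline above that is `hello-world/hello`.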

What’s next?

The next step is getting into what’s going on when we create a new pipeline.

Concourse includes a database component - when we create a new pipeline, what does that interaction look like?

That’s something to be covered next.

Please let me know what you think about Concourse - have you already used it?

I’m cirowrc on Twitter, let me know if you have any questions!

Have a good one,

finis